#machine-readable text

Text

AI Tool Reproduces Ancient Cuneiform Characters with High Accuracy

ProtoSnap, developed by Cornell and Tel Aviv universities, aligns prototype signs to photographed clay tablets to decode thousands of years of Mesopotamian writing.

Cornell University researchers report that scholars can now use artificial intelligence to “identify and copy over cuneiform characters from photos of tablets,” greatly easing the reading of these intricate scripts.

The new method, called ProtoSnap, effectively “snaps” a skeletal template of a cuneiform sign onto the image of a tablet, aligning the prototype to the strokes actually impressed in the clay.

By fitting each character’s prototype to its real-world variation, the system can produce an accurate copy of any sign and even reproduce entire tablets.

“Cuneiform, like Egyptian hieroglyphs, is one of the oldest known writing systems and contains over 1,000 unique symbols.

Its characters change shape dramatically across different eras, cultures and even individual scribes so that even the same character… looks different across time,” Cornell computer scientist Hadar Averbuch-Elor explains.

This extreme variability has long made automated reading of cuneiform a very challenging problem.

The ProtoSnap technique addresses this by using a generative AI model known as a diffusion model.

It compares each pixel of a photographed tablet character to a reference prototype sign, calculating deep-feature similarities.

Once the correspondences are found, the AI aligns the prototype skeleton to the tablet’s marking and “snaps” it into place so that the template matches the actual strokes.

In effect, the system corrects for differences in writing style or tablet wear by deforming the ideal prototype to fit the real inscription.
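The matching step described above can be illustrated with a toy sketch. This is not the ProtoSnap implementation: the function names, the plain-list feature format, and the use of cosine similarity as the "deep-feature similarity" are all assumptions made for illustration, and real features would come from a diffusion model rather than hand-written vectors.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def snap_keypoints(prototype_feats, image_feats):
    """For each prototype keypoint feature, find the most similar image pixel.

    prototype_feats: list of feature vectors, one per prototype keypoint (hypothetical format).
    image_feats: 2D grid (rows x cols) of feature vectors, one per pixel (hypothetical format).
    Returns a list of (row, col) positions, one per keypoint.
    """
    positions = [
        (r, c)
        for r, row in enumerate(image_feats)
        for c, _ in enumerate(row)
    ]
    matches = []
    for feat in prototype_feats:
        best = max(positions, key=lambda rc: cosine(feat, image_feats[rc[0]][rc[1]]))
        matches.append(best)
    return matches
```

Each prototype keypoint is moved to the pixel whose features look most like it, which is the intuition behind "snapping" the template onto the real strokes.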

Crucially, the corrected (or “snapped”) character images can then train other AI tools.

The researchers used these aligned signs to train optical-character-recognition models that turn tablet photos into machine-readable text.

They found the models trained on ProtoSnap data performed much better than previous approaches at recognizing cuneiform signs, especially the rare ones or those with highly varied forms.

In practical terms, this means the AI can read and copy symbols that earlier methods often missed.

This advance could save scholars enormous amounts of time.

Traditionally, experts painstakingly hand-copy each cuneiform sign on a tablet.

The AI method can automate that process, freeing specialists to focus on interpretation.

It also enables large-scale comparisons of handwriting across time and place, something too laborious to do by hand.

As Tel Aviv University archaeologist Yoram Cohen says, the goal is to “increase the ancient sources available to us by tenfold,” allowing big-data analysis of how ancient societies lived – from their religion and economy to their laws and social life.

The research was led by Hadar Averbuch-Elor of Cornell Tech and carried out jointly with colleagues at Tel Aviv University.

Graduate student Rachel Mikulinsky, a co-first author, will present the work – titled “ProtoSnap: Prototype Alignment for Cuneiform Signs” – at the International Conference on Learning Representations (ICLR) in April.

In all, roughly 500,000 cuneiform tablets are stored in museums worldwide, but only a small fraction have ever been translated and published.

By giving AI a way to automatically interpret the vast trove of tablet images, the ProtoSnap method could unlock centuries of untapped knowledge about the ancient world.

#protosnap#artificial intelligence#a.i#cuneiform#Egyptian hieroglyphs#prototype#symbols#writing systems#diffusion model#optical-character-recognition#machine-readable text#Cornell Tech#Tel Aviv University#International Conference on Learning Representations (ICLR)#cuneiform tablets#ancient world#ancient civilizations#technology#science#clay tablet#Mesopotamian writing

5 notes


Text

sooo happy!

#ultrakill#ultrakill fanart#ultrakill mirage#mirage ultrakill#chiikawa#sofmof#im still learnign how to draw machines sry if this is not thag good#also i hope the japanese text is. readable. i tried#i love merging hyperfixiations

1K notes


Text

I love this alternate universe "what if OD&D had been a shitty late 1980s zine game" thing a certain segment of the OSR crowd has going on, but my problem is that even when they're going out of their way to emulate the characteristic jank of the era, the production values are way too slick. If we're really aiming to capture the spirit of the times, where's the grotesque line breaking and the paragraphs that end in mid sentence? Where are the illustrations that were clearly drawn on line-feed printer paper in ballpoint pen, complete with visible edges where they were cut out and pasted into the master document? Where are the layouts where no two pages have the same margins? Where are the parts where the text is randomly canted at about a five degree angle off horizontal because somebody fucked up when feeding that particular page into the Xerox machine and couldn't be arsed to redo it? I want an end product that's barely readable, is what I mean to say.

745 notes


Text

Promotional Super Mario 64 poster from Silicon Graphics, the creators of the Nintendo 64 processors. The text on the bottom (barely readable when zoomed in) reads as follows:

It's a little black box that can immerse you in exciting 3D virtual worlds, where you can search for treasure, explore underwater kingdoms, even fight an overweight dragon. It's also the hottest game machine on the market. Driven by 64-bit MIPS processors and RealityEngine 3D graphics technology, both from Silicon Graphics, the Nintendo 64 game machine provides a glimpse into our future - where incredibly powerful visual computing is accessible and easy to use.

Main Blog | Twitter | Patreon | Small Findings | Source: ryanslayer

646 notes


Text

Pancaketiffy's Squidbob comics in English (google drive link)

I've been able to track down almost all of the Squidbob comics created by Pancaketiffy in English! Huge thanks to @lake-fmoli for sharing a majority of these with me ^^

Included in this Google Drive link are the comics Squidward's Birthday Gift, Tiki Ceremony, Vacation, Nautical Disaster, Squelly, The Secret Formula, and The Fantastic And Expansive Life Of Squilliam Fancyson, as well as some mini-comics and miscellaneous art.

Tiki Ceremony had a few pages only in Spanish -- pages 30-33 and page 68 -- and since I know it's going to be VERY hard to find those original pages, I've taken an English translation I found and edited those remaining Spanish pages back into English; those pages I've labeled as "retranslated." I'll still be looking for those original pages, and I'm hoping I can find someone who has them, but for now this is the best way to complete the English version of the comic. (Thank you to the people who uploaded the Spanish-translated pages to a NSFW site along with accurate English translations, lol)

I have included two versions of Vacation -- the version where Pancaketiffy took out the NSFW pages, and the original version with the NSFW pages intact. The NSFW version has been labeled "EXPLICIT." The censored version was taken directly from an upload on Internet Archive -- so big thank you to the person who uploaded that! The only things missing from these are the English chapter covers.

Nautical Disaster, Squelly, and The Secret Formula were never completed, and as far as I'm aware I've gathered all of the completed pages. I THINK it's the same for The Fantastic And Expansive Life Of Squilliam Fancyson, but I'm unsure. Secret Formula and the Squilliam comic were retrieved from a drive that I can't find anymore 😅 but thank you to whoever uploaded those in the first place.

Some pages are higher-quality than others, but I'm just glad I've found readable English versions of the comics in general. If anybody has or ever finds higher-quality pages, the original English pages of Tiki Ceremony, the English chapter covers for Vacation, or anything else that's missing, please send them to me. I'm gonna try to keep this thing updated, as well as figure out the best way to upload this stuff to Internet Archive.

Again, big thank you to everyone who saved and shared the art with me!

!!! UPDATE 5/24/2025: All the English pages for these comics have been found! As far as I know, at least. Now all I'm looking for regarding Tiffy's Spongebob art would be any higher-resolution images as well as any other missing pieces of art.

I have also decided to archive the rest of Pancaketiffy/Hysterysusie's other art, in a new folder titled Non-SB Art. This includes other fan-works and original series. I'm mostly looking to find more pages of her original comic Alan And The Final Star; this comic had over 100 pages, but I've only been able to find the first 16. I'm also interested in finding any art regarding her Sonic OC comic for Zati The Rebel, which I've only been able to find mentioned on the Wayback Machine. More info in the text file in the google drive.

#pancaketiffy#hysterysusie#squidbob#my post#i hope this doesn't come off as weird but this was going to eat me alive if i couldn't find all of this#i've seen people upset at how hard it is to find this stuff in english#and who just miss tiffy's art in general#she still is one of my fave internet artists even if she left a long time ago#i think i'm actually more upset that her original works are gone#but those are gonna be much much harder to find and are likely never gonna be uncovered again#but at least i was able to find this stuff

98 notes


Text

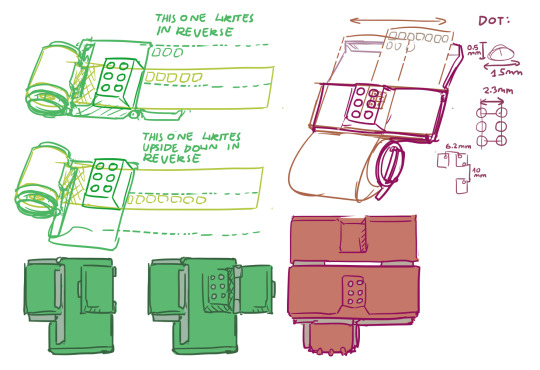

Please reblog! I'd like to design a cheap braille typewriter (prototyping by 3D printing; the final design will be machined). I stumbled upon the linked YouTube short and had a thought: "designing a 6/8-button typewriter is within my technical capabilities"

I have many design questions I wish I could test out

My roadblock is that I don't know anyone who's visually impaired, and casually seeking out a random place to peddle my soon-to-be invention is not something I'm capable of

Many design questions:

Paper type: what is the minimum quality/density of paper for the dots to be readable? Can thermal receipt paper be used for notes?

Embossed vs punched out: is the difference significant for the typewriter not tearing the paper? It's important for "undo", but that's pretty far along in my typewriter-building plan

Typewriter size: My initial idea was something like a portable cash register with a receipt paper spool and a little tray for it to glide along (I quickly realized it's a bad design because it can't fit more than 7 characters, or I can make an infinite scroll of a single line with questionable ergonomics). Ultimately this is related to page size, so what would be best for it? Standard A4 paper? Is being portable important?

Keyboard layout: the Perkins Brailler has all its buttons inline, forming a long row. Wouldn't a single-hand keyboard in a layout similar to the braille dots be more convenient? (straight grid or mimicking the angle of computer keyboard letters)

Typing feedback: should a typed letter be instantly accessible and not obstructed by the typewriter? Maybe typing with one hand and instantly proofreading with the other?

Typewriter or printer?: alternatively, I can make a little annoying-noise-making servo-powered printer that will punch out text, Arduino or Raspberry Pi based (I have experience with both). It would be the most benevolent USB-powered printer, because it doesn't require ink to work

Thanks for reading! [I'm not transcribing my design scribble, because it's absolute dogshit, but it helped me formulate requirements. I will add transcriptions to designs that are actually thought out]

Alternatively, if I'm tweaking right now and if that thing would be needed it would already exist, I'll go back to trying to get hired by random megacorp and that's the last time you hear of me talking about it 💀

#braille#accessibility#inclusivity#blindness#blind#visually impaired#actually blind#low vision#visual impairment#Youtube

65 notes


Text

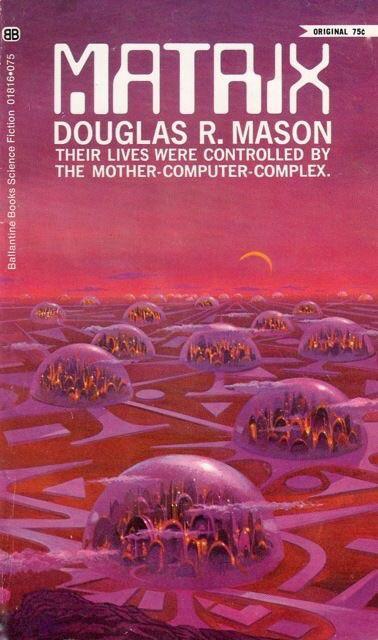

Machine-readable text goes all the way back to the 1950s, when the banking industry developed MICR (Magnetic Ink Character Recognition) to allow automatic processing of checks.

The system used a distinctive numeric font set that made it easy to read via magnetic sensors, while still being human readable as well. The style became associated with computers and futuristic technology and fonts inspired by MICR were a staple of 1960s and 70s Sci Fi movies and book covers.

527 notes


Text

Pretty regularly, at work, I ask ChatGPT hundreds of slightly different questions over the course of a minute or two.

I don't type out these individual questions, of course. They're constructed mechanically, by taking documents one by one from a list, and slotting each one inside a sandwich of fixed text. Like this (not verbatim):

Here's a thing for you to read: //document goes here// Now answer question XYZ about it.
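A minimal sketch of that "sandwich" construction, assuming the documents are already loaded as plain strings. The names `PROMPT_TEMPLATE` and `build_prompts` are made up for illustration, and the template wording is the paraphrase from above, not the real prompt.

```python
# Fixed text with a slot for each document (wording is illustrative only).
PROMPT_TEMPLATE = (
    "Here's a thing for you to read:\n\n"
    "{document}\n\n"
    "Now answer question XYZ about it."
)

def build_prompts(documents):
    """Return one prompt per document, each wrapped in the same fixed text."""
    return [PROMPT_TEMPLATE.format(document=doc) for doc in documents]
```

Each resulting string would then be sent to the model as its own request; the sending step is left out here because it depends on whichever client library is in use.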

I never read through all of the responses, either. Maybe I'll read a few of them, later on, after doing some kind of statistics to the whole aggregate. But ChatGPT isn't really writing for human consumption, here. It's an industrial machine. It's generating "data," on the basis of other "data."

Often, I ask it to write out a step-by-step reasoning process before answering each question, because this has been shown to improve the quality of ChatGPT's answers. It writes me all this stuff, and I ignore all of it. It's a waste product. I only ask for it because it makes the answer after it better, on average; I have no other use for it.

The funny thing is -- despite being used in a very different, more impersonal manner -- it's still ChatGPT! It's still the same sanctimonious, eager-to-please little guy, answering all those questions.

Fifty questions at once, hundreds in a few minutes, all of it in that same, identical, somewhat annoying brand voice. Always itself, incapable of tiring.

This is all billed to my employer at a rate of roughly $0.01 per 5,000 words I send to ChatGPT, plus roughly $0.01 per 3,750 words that ChatGPT writes in response.

In other words, ChatGPT writing is so cheap, you can get 375,000 words of it for $1.
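The arithmetic behind that figure, as a small sketch. The rates are the rough ones quoted above (one cent per 5,000 words sent, one cent per 3,750 words written); the function names are hypothetical, and real billing is per token, not per word.

```python
CENTS_PER_DOLLAR = 100
WORDS_SENT_PER_CENT = 5_000      # rough input rate quoted in the post
WORDS_WRITTEN_PER_CENT = 3_750   # rough output rate quoted in the post

def words_per_dollar(words_per_cent):
    """How many words one dollar buys at a given words-per-cent rate."""
    return words_per_cent * CENTS_PER_DOLLAR

def estimated_cost_dollars(words_sent, words_written):
    """Rough cost of a job, combining the input and output rates."""
    cents = words_sent / WORDS_SENT_PER_CENT + words_written / WORDS_WRITTEN_PER_CENT
    return cents / CENTS_PER_DOLLAR

print(words_per_dollar(WORDS_WRITTEN_PER_CENT))  # 375000
```

By the same estimate, a batch of 300 questions at about 1,000 words sent and 250 words written each comes to roughly $0.80.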

----

OpenAI decided to make this particular "little guy" very cheap and very fast, maybe in recognition of its popularity.

So now, if you want to use a language model like an industrial machine, it's the one you're most likely to use.

----

Why am I making this post?

Sometimes I read online discourse about ChatGPT, and it seems like people are overly focused on the experience of a single human talking to ChatGPT in the app.

Or, at most, the possibility of generating lots of "content" aimed at humans (SEO spam, generic emails) at the press of a button.

Many of the most promising applications of ChatGPT involve generating text that is not meant for human consumption.

They go in the other direction: they take things from the messy, human, textual world, and translate them into the simpler terms of ordinary computer programs.

Imagine you're interacting with a system -- a company, a website, a phone tree, whatever.

You say or type something.

Behind the scenes, unbeknownst to you, the system asks ChatGPT 13 different questions about the thing you just said/typed. This happens almost instantaneously and costs almost nothing.

No human being will ever see any of the words that ChatGPT wrote in response to this question. They get parsed by simple, old-fashioned computer code, and then they get discarded.

Each of ChatGPT's answers ends in a simple "yes" or "no," or a selection from a similar set of discrete options. The system uses all of this structured, "machine-readable" (in the old-fashioned sense) information to decide what to do next, in its interaction with you.
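The "simple, old-fashioned computer code" that does the parsing could look something like the sketch below. It assumes each response ends with one of a fixed set of single-word options; `parse_final_choice` is a made-up name, not part of any library.

```python
import re

def parse_final_choice(response, options=("yes", "no")):
    """Return the option that the response ends with, or None if it ends with something else.

    Everything before the last word (for example, step-by-step reasoning) is ignored.
    """
    words = re.findall(r"[a-z]+", response.lower())
    if words and words[-1] in options:
        return words[-1]
    return None
```

Returning `None` for anything unexpected lets the calling system retry or fall back to a default instead of guessing.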

This is the kind of thing that will happen, more and more.

742 notes


Text

'history of the hour' is honestly a great book and i'm so pleased i got myself a copy of it. it IS dense, but it's pretty readable even to me, who routinely struggles to absorb and comprehend academic texts. might be that it just hits a perfect spot for a very specific area of my interests (the evolution of how we read time)!

ANYWAY there is a section in the medieval hours chapter that i find very amusing called "some misconceptions". here gerhard dohrn-van rossum drags three other historians for claiming that benedictine monasteries were "the founders of modern capitalism" and playing up how machine-like and precisely ruled by the iron grip of Clocks monastery life was, when all evidence suggests that the monks were kinda chill about it and more about the Rhythms Of The Day rather than The Exact Hour.

"all three authors fail to distinguish between alarm devices, mechanical clocks, and striking clocks." scathing.

#clockblogging#i do indeed get to spend school time taking notes today!!!!#idk if anyone else finds this interesting but this is the fate of following my blog#i don't even need this tidbit for my thesis#another section in this book is titled 'setting time limits on torture'

57 notes


Text

Introduction and UtsuKare Translations Master Post

Some of you might recognize me as that Russian translator of Utsukushii Kare books from Wattpad. I decided to revive my tumblr to compile all the links and explanations here for those of you, My Beautiful Man fans, who can't wait for official English releases of the books.

I could never keep a blog, so for now here I'll just tell how it all came about, and you can find links to all my MBM translations at the end (feel free to just skip the wall of text).

So a couple of years ago I finally bowed down and decided to read Utsukushii Kare series in Japanese for language practice, even though I found the summary unappealing and I'm generally suspicious of overhyped media (as far as BL novels go, these books seemed to be The most hyped-up series in Japan). Much to my surprise, I loved it so much it was hard to move on. And while I waited for a chance to buy book 3 and Interlude, I gobbled up everything else related to the series that I could. The manga was only just starting, I didn't like dramaCDs (but I'm in the minority), and the drama somehow revived my love for watching Jdramas, even though I thought that this part of my fandom life has been over for years. When the second season started airing, I made a new friend in the Russian-speaking parts of the Internet who was even more obsessed with MBM than I am, and we fangirled to our hearts' content. At some point I promised her to translate the big sex scene from the end of book 3 as a gift for all the talks. I did, and since back then there was nothing for book 3 in any European language, as far as I know, I decided to post it online and give a link to English-speaking UtsuKare fans too. And since Wattpad doesn't allow copying text, and the browser translator feature from Google Translate was really inadequate, I also put up a link to the translation made with Deepl. As far as machine translators go, it is noticeably more comprehensible, and I didn't have the time (or skills to do the book justice, really) to translate it to English myself. Anyway, after this excerpt I thought I could manage one more important scene from book 3, then one more, and then I finally gave up and started translating it properly from the beginning. I also started correcting mistranslations in Deepl-versions that I kept doing for English readers, so some parts of the book are now much more readable than others. 
Now the third and the second book are done and I started to work on book 4, Mamanaranai Kare that was published in Japan at the end of October 2024. I also translated several stories from Interlude and plan to do at least one more, and maybe some others for some holidays.

So here are the links to everything I've translated from My Beautiful Man book series:

Book 3 "Nayamashii Kare" which continues the story past the movie (completed). The text is in Russian, but there are links to decent machine translations to English at the beginning of each part (I've also run through most of them and corrected the mistranslations). Or you can use the in-browser translation feature, but the results would be less readable.

Book 2 "Nikurashii Kare" which was technically turned into season 2 of the drama and the movie, but the script has deviated so much from the book, at times it's like a completely different story (completed). I don't make Deepl translations for this since the official English release finally came out in December.

Stories from the Interlude. A number of stand-alone stories from the collection called "Interlude". The book has a total of 15 stories, and I probably won't translate it in its entirety, but I've done the ones that I personally liked. One of them had also been translated to English by Mauli before, but I didn't use her version when working on mine. The rest of the stories have never been translated by anyone else, as far as I know. These, too, have links to Deepl-versions at the beginning.

Book 4 "Mamanaranai Kare" continues where book 3 left off. Ongoing. Usually I can manage at least one update per month, sometimes more, and I plan to divide the whole book into 12 parts. Each part will have a link to an English version which is machine-translated but with all mistranslations corrected by me.

Disclaimer: my Japanese is not yet really on a level good enough to translate fiction, and there are bound to be mistranslations even if you read the original Russian versions. But I'm cross-checking myself on everything to try and keep those mistakes to minor things. I also know how to translate so I made sure that the text flows well, doesn't feel choppy and retains the same vibe that I get from reading the original.

66 notes


Text

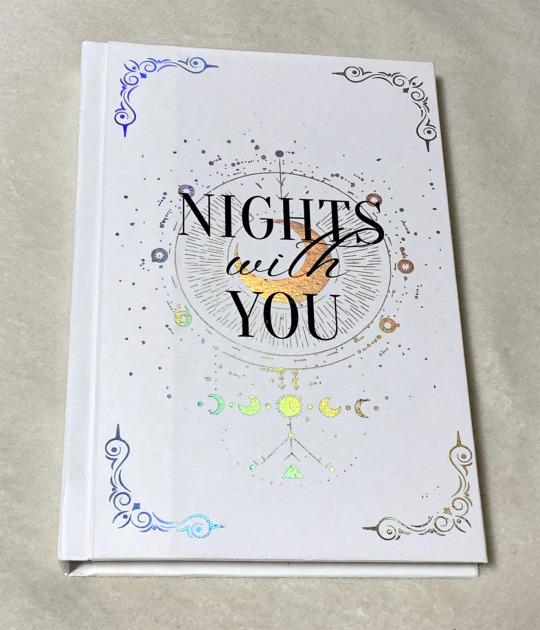

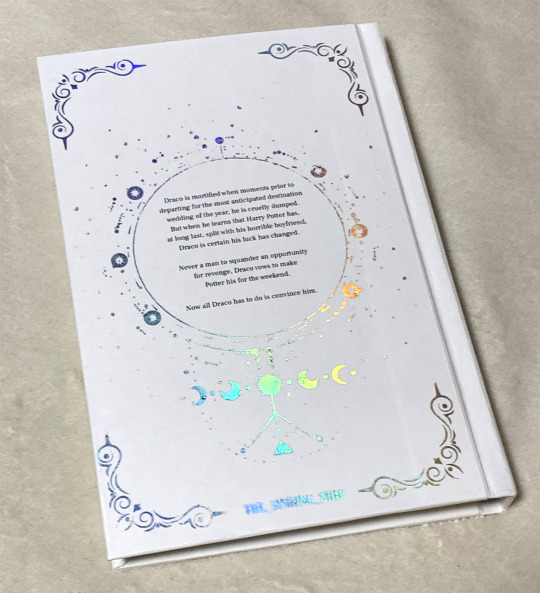

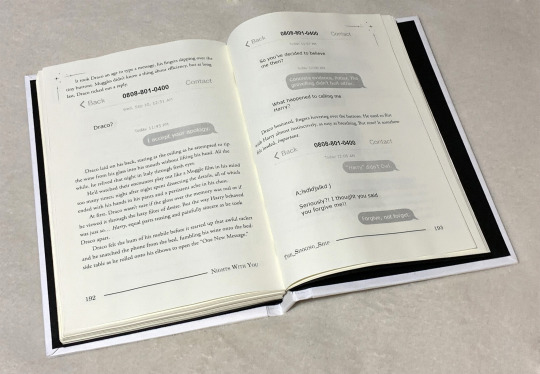

Nights With You by The_Sinking_Ship

The second fic I ever bound, I read this on a whim one day and just knew I needed to bind it!

As you might be able to tell by the photos, I was still struggling a lot with my foiling, especially on the author's tag on the back 😭

I also very clearly had to work on my measurements. Seeing as I did not have a printer that could print edge to edge, I had to calculate how much I needed to resize it to print on a 1:1 scale.

I thankfully learned a way to add proper crop marks later on as well which has helped me immensely with placing the covers on properly!

As always, the cover is all made with Kent paper since I don't own a cutting machine and haven't been able to find any decent book cloth where I live... 🙃

This was also the first time I experimented with adding text messages in the fic, and I think it's really fun!

Sadly, this was my old printer that was only able to print black and white and the quality wasn't the best so the grey bubbles are barely visible, but at least it's still readable.

I think I might want to redo this bind later on, especially since I got a laser printer that can print in color now!

#drarry#bookbinding#ficbinding#fanbinding#harry x draco#fanfic binding#drarry fic#tsurashi-bindery#book binding#fic binding#harry potter

104 notes


Text

Write with your heart, edit with your inner critic and ears

My last two posts have been more from the heart, but I think more people need actual editing advice like I have talked about in a previous thought post. Like I said there, plenty of teachers and professors have no issues talking about writing and formulating and drafting works. Over all my years of writing, I only know of one professor who I can recall with 99% certainty taught us useful tips for editing.

And I will say that like writing, there's no one size fits all, because nobody writes the same as anyone else--or even themselves as they hone and improve their skills. A lot should be based on what vibe you want to create and what is needed for the work/chapter/draft you are focusing on.

But if nothing else, there are three things you want to focus on:

Spelling and punctuation as fitting for the message you want to convey

Formatting to make it readable and to improve the message

And most importantly: READING THE WORK ALOUD BEFORE PUBLISHING

The last one is what I don't see talked about enough, and was reminded by a community post recommended for me (I will never follow them, they're impossible to share and most seem like a waste of time), so I decided to expand on it in a more shareable format.

(Note: I believe that doing this with your own voice or text to speech are equally helpful. Sometimes you want to go at your own pace and use voices. Maybe you have to have another voice to notice things. Both work to reach the same goal of polishing your writing!)

I am going to start by saying that there are times when you can tell a piece could have used an extra pass by how it reads. There are often weird turns of phrase and spelling that is not obtrusive but makes you pause (and I will be the first to say I want readers to tell me if they catch these for whatever reason; even the less error-prone machine still lets flecks of misspellings and pebbles of forgotten commas slip through). Or there's the missing period that makes two sentences become a long run-on sentence that technically makes sense.

The editing is on the surface sufficient, but it's missing a last pass that could buff out a final draft and let you read it later, when more experienced, with pride. And without asking why you forgot something so obvious, or why you worded it that way. (I love looking at outstanding lines from old works, I often laugh at how even my spicy stuff can have a raw scene outside of the bedroom).

One of the best ways I found, after formatting and spellchecking and double-checking the right words are used, is to give it a final read out loud! Yes, I can understand that you might ask why it has to be out loud when you already went over it so many times, and you and the editing software now say it's fine. And that's exactly why. Things that a computer might say are good might not be the right wording for the story. You might have changed something to get the sentence to make sense but it ruined the actual mood, or you added something that is out of character. An extra adjective you thought worked didn't actually fit and you forgot to hit delete. There are so many times I have changed something because I wasn't satisfied with the flow and just didn't backspace enough during a much earlier draft, and it slipped through till the final pass while I read it aloud.

Or--and this is a big one for me--reading it out loud made me realize that it won't work at all. Not due to anything technical or because the section makes no sense. Just something about having it spoken out loud awakens my inner critic to an issue I didn't notice until now. A whole paragraph might finally show it can be cut and make a transition easier. A sentence might be deleted because it was more distracting fluff and I see it should be deleted (no matter how nice it worked in my head). Or if not gone, it was in the wrong spot. Now I have to read it out loud, word by word, I can paste a section in a better place and change the whole flow.

I've seen people talk about how they use text to speech to see if something goes on for too long and know when to stop by how it starts to distort its voice to keep going. You can do the same too.

By reading aloud, you'll at last understand the readers' plight of flowery purple prose by struggling to catch your breath. Suffer while the TTS malfunctions from you using too many adjectives about the MC in the mirror. See if maybe you can change that comma or em dash into a period or semicolon. Play with different voices and see if that fanfic about your favorite character sounds OOC or you are really that good at getting inside their head. See how the words fit as you get a fresh perspective, watching them fall into place like puzzle pieces while observing how each flows like a poetic melody.

It is all experience for when you write that next chapter or work. Sure, it's not as intense as typing it all out, but it's not like you're not learning from it. Editing is writing too; what you master here can translate to better writing next time, and a cleaner first draft in the future. (Like I've said before, you'll never have a perfect first draft, but you can write a clean first draft to make it easier for yourself.)

Just remember, writing is supposed to be as good as you are today, and never a suffering contest. Not every aspect of it will be exciting but do not force yourself to make it more difficult than you can handle. If I am miserable, I am not creative, and this is true for so many; and if anyone tells you that makes someone a fake/poser/imposter, ignore them. If they like being sad to write a depressed and angsty character, that is their method alone. Editing does not have to be a slog, but it should be something you put effort in to so the final product is something you are proud of.

Listen to the fun writing voice when you are outlining and writing, and unleash the inner critic when you edit. Both are there for a reason.

#writing#writeblr#writers on tumblr#writerscommunity#creative writing#writing advice#writing tips#editing tips#inner critic#The inner critic is good for editing#Do not trust the inner critic for writing#It wants to twist words not play like your writing brain

23 notes


Text

it's time for the long-threatened post about how to get subtitles (including translated ones) for videos that don't have subtitles.

in my experience, the methods in this post can probably get you solidly 75% or more of the content of many videos (caveats inside). i've tested this on videos that are originally in chinese, english, french, german, hindi, japanese, korean, spanish, and honestly probably some languages that i'm forgetting. my experience is that it works adequately in all of them. not great, necessarily, but well enough that you can probably follow along.

this is a very long post because this is the overexplaining things website, and because i talk about several different ways to get the captions. this isn't actually difficult, though, or even especially time consuming—the worst of it is pushing a button and ignoring things for a while. actual hands-on work is probably five minutes tops, no matter how long the video is.

i've attempted to format this post understandably, and i hope it's useful to someone.

first up, some disclaimers.

this is just my experience with things, and your experience might be different. the tools used for (and available for) this kind of thing change all the time, and if you're reading this six months after i wrote it, your options might be different. this post is probably still a decent starting place.

background about my biases in this: i work in the creative industries. mostly i'm a fiction editor. i've also been a writer, a technical editor and writer, a transcriptionist, a copyeditor, and something i've seen called a 'translation facilitator' or 'rewrite editor', where something is translated fairly literally (by a person or a machine) and then a native speaker of the target language goes through and rewrites/restructures as needed to make the piece read more naturally in the target language. i've needed to get information out of business meetings that were conducted in a language i didn't speak, and have done a lot of work on things that were written in (or translated into) the writer's second or sixth language, but needed to be presented in natural english.

so to start, most importantly: machine translation is never going to be as good as a translation done by an actual human. human translators can reflect cultural context and nuanced meanings and the artistry of the work in a way that machines will never be able to emulate. that said, if machine translation is your only option, it's better than nothing. i also find it really useful for videos in languages where i have enough knowledge that i'm like, 75% sure that i'm mostly following, and just want something that i can glance at to confirm that.

creating subs like this relies heavily on voice-to-text, which—unfortunately—works a lot better in some situations than it does in others. you'll get the best, cleanest results from videos that have slow, clear speech in a 'neutral' accent, and only one person speaking at a time. (most scripted programs fall into this category, as do many vlogs and single-person interviews.) the results will get worse as voices speed up, overlap more, and vary in volume. that said, i've used this to get captions for cast concerts, reality shows, and variety shows, and the results are imperfect but solidly readable, especially if you have an idea of what's happening in the plot and/or can follow along even a little in the broadcast language.

this also works best when most of the video is in a single language, and you select that language first. the auto detect option sometimes works totally fine, but in my experience there's a nonzero chance that it'll at least occasionally start 'detecting' random other languages incorrectly, or someone will say a few words in spanish or whatever, but the automatic detection engine will keep trying to translate from spanish for another three minutes, even tho everything's actually in korean. if there's any way to do so, select the primary language, even if it means that you miss a couple sentences that are in a different language.

two places where these techniques don't work, or don't work without a lot of manual effort on your part: translating words that appear on the screen (introductions, captions, little textual asides, etc), and music. if you're incredibly dedicated, you can do this and add it manually yourself, but honestly, i'm not usually this dedicated. getting captions for the words on the screen will involve either actually editing the video or adding manually translated content to the subs, which is annoying, and lyrics are...complicated. it's possible, and i'm happy to talk about it in another post if anyone is interested, but for the sake of this post, let's call it out of scope, ok? ok. bring up the lyrics on your phone and call it good enough.

places where these techniques are not great: names. it's bad with names. names are going to be mangled. resign yourself to it now. also, in languages that don't have strongly gendered speech, you're going to learn some real fun stuff about the way that the algorithms gender things. (spoiler: not actually fun.) bengali, chinese, and turkish are at least moderately well supported for voice-to-text, but you will get weird pronouns about it.

obligatory caveat about ai and voice-to-text functionality. as far as i'm aware, basically every voice-to-text function is ~ai powered~. i, a person who has spent twenty years working in the creative industries, have a lot of hate for generative ai, and i'm sure that many of you do, too. however, if voice-to-text (or machine translation software) that doesn't rely on it exists anymore, i'm not aware of it.

what we're doing here is the same as what douyin/tiktok/your phone's voice-to-text does, using the same sorts of technology. i mention this because if you look at the tools mentioned in this post, at least some of them will be like 'our great ai stuff lets you transcribe things accurately', and i want you to know why. chat gpt (etc) are basically glorified predictive text, right? so for questions, they're fucking useless, but for things like machine transcription and machine translation, those predictions make it more likely that you get the correct words for things that could have multiple translations, or for words that the software can only partially make out. it's what enables 'he has muscles' vs 'he has mussels', even though muscles and mussels are generally pronounced the same way. i am old enough to have used voice to text back when it was called dictation software, and must grudgingly admit that this is, in fact, much better.

ok! disclaimers over.

let's talk about getting videos

for the most part, this post will assume that you have a video file and nothing else. cobalt.tools is the easiest way i'm aware of to download videos from most sources, though there are other (more robust) options if you're happy to do it from the command line. i assume most people are not, and if you are, you probably don't need this guide anyhow.

i'm going to use 'youtube' as the default 'get a video from' place, but generally speaking, most of this works with basically any source that you can figure out how to download from—your bilibili downloads and torrents and whatever else will work the same way. i'm shorthanding things because this post is already so so long.

if the video you're using has any official (not autogenerated) subtitles that aren't burned in, grab that file, too, regardless of the language. starting from something that a human eye has looked over at some point is always going to give you better results. cobalt.tools doesn't pull subtitles, but plugging the video url into downsub or getsubs and then downloading the srt option is an easy way to get them for most places. (if you use downsub, it'll suggest that you download the full video with subtitles. that's a link to some other software, and i've never used it, so i'm not recommending it one way or the other. the srts are legit, tho.)

the subtitle downloaders also have auto translation options, and they're often (not always) no worse than anything else that we're going to do here—try them and see if they're good enough for your purposes. unfortunately, this only works for things that already have subtitles, which is…not that many things, honestly. so let's move on.

force-translating, lowest stress mode.

this first option is kind of a cheat, but who cares. youtube will auto-caption things in some languages (not you, chinese) assuming that the uploader has enabled it. as ever, the quality is kinda variable, and the likelihood that it's enabled at all seems to vary widely, but if it is, you're in for a much easier time of things, because you turn it on, select whatever language you want it translated to, and youtube…does its best, anyhow.

if you're a weird media hoarder like me and you want to download the autogenerated captions, the best tool that i've found for this is hyprscribr. plug in the video url, select 'download captions via caption grabber', then go to the .srt data tab, copy it out, and paste it into a text file. save this as [name of downloaded video].[language code].srt, and now you have captions! …that you need to translate, which is actually easy. if it's a short video, just grab the text, throw it in google translate (timestamps and all), and then paste the output into a new text file. so if you downloaded cooking.mp4, which is in french, you'll have three files: cooking.mp4, cooking.fr.srt, and cooking.en.srt. this one's done! it's easy! you're free!
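if you end up doing this a lot, the naming part is easy to script. here's a minimal python sketch (the function name `sub_name` is made up for illustration, it isn't part of any tool mentioned here) that builds the `[name of downloaded video].[language code].srt` file name from the video's file name:

```python
from pathlib import Path

def sub_name(video_file: str, lang_code: str) -> str:
    """Build a subtitle file name like cooking.fr.srt from cooking.mp4 and 'fr'."""
    video = Path(video_file)
    # keep the video's base name, swap the extension for .<language code>.srt
    return str(video.with_name(f"{video.stem}.{lang_code}.srt"))

print(sub_name("cooking.mp4", "fr"))  # cooking.fr.srt
print(sub_name("cooking.mp4", "en"))  # cooking.en.srt
```

keeping the base name identical to the video's is the point: the subs then sit next to the video under a matching name.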

but yeah, ok, most stuff isn't quite that easy, and auto-captioning has to be enabled, and it has some very obvious gaps in the languages it supports. which is sort of weird, because my phone actually has pretty great multilingual support, even for things that youtube does not. which brings us to low-stress force translation option two.

use your phone

this seems a little obvious, but i've surprised several people with this information recently, so just in case. for this option, you don't even need to have downloaded the video—if it's a video you can play on your phone, the phone will almost definitely attempt real-time translation for you. i'm sure iphones have this ability, but i'm an android person, so can only provide directions for that: go into settings and search for (and enable) live translation. the phone will do its best to pick up what's being said and translate it on the fly for you, and if 'what's being said' is a random video on the internet, your phone isn't gonna ask questions. somewhat inexplicably, this works even if the video is muted. i do this a lot at like four a.m. when i'm too lazy to grab earbuds but don't want to wake up my wife.

this is the single least efficient way to force sub/translate things, in my opinion, but it's fast and easy, and really useful for those videos that are like a minute long and probably not that interesting, but like…what if it is, you know? sometimes i'll do this to decide if i'm going to bother with more complicated ways of translating things.

similarly (and i feel silly even mentioning this, but i didn't think of it for an embarrassingly long time): if you're watching something on a device with speakers, you can try just…opening the 'translate' app on your phone. they all accept voice input. like before, it'll translate whatever it picks up.

neither of these methods are especially useful for longer videos, and in my experience, the phone-translation option generally gives the least accurate translation, because in attempting to do things in real time, you lose some of the predictive ability that i was talking about earlier. (filling in the blank for 'he has [muscles/mussels]' is a lot harder if you don't know if the next sentence is about the gym or about dinner.)

one more lazy way

this is more work than the last few options, but often gives better results. with not much effort, you can feed a video playing on your computer directly into google translate. there's a youtube video by yosef k that explains it very quickly and clearly. this will probably give you better translation output than any of the on-the-fly phone things described above, but it won't give you something that you can use as actual subs—it just produces text output that you can read while you watch the video. again, though, really useful for things that you're not totally convinced you care about, or for things where there aren't a lot of visuals, or for stuff where you don't care about keeping your eyes glued to the screen.

but probably you want to watch stuff on the screen at the same time.

let's talk about capcut!

this is probably not a new one for most people, but using it like this is a little weird, so here we go. ahead of time: i'm doing this on an actual computer. i think you probably can do it on your phone, but i have no idea how, and honestly this is already a really long guide so i'm not going to figure it out right now. download capcut and put it on an actual computer. i'm sorry.

anyhow. open up capcut, click new project. import the file that you downloaded, and then drag it down to the editing area. go over to captions, auto captions, and select the spoken language. if you want bilingual captions, pick the language for that, as well, and the captions will be auto-translated into whatever the second language you choose is. (more notes on this later.)

if i remember right, this is the point at which you get told that you can't caption a video that's more than an hour long. however. you have video editing software, and it is open. split the video in two pieces and caption them separately. problem solved.

now the complicated part: saving these subs. (don't panic; it's not actually that complicated.) as everyone is probably aware, exporting captions is a premium feature, and i dunno about the rest of you, but i'm unemployed, so let's assume that's not gonna happen.

the good news is that since you've generated the captions, they're already saved to your computer, they're just kinda secret right now. there are a couple ways to dig them out, but the easiest i'm aware of is the biyaoyun srt generator. you'll have to select the draft file of your project, which is auto-saved once a minute or something. the website tells you where the file is saved by default on your computer. (i realised after writing this entire post that they also have a step-by-step tutorial on how to generate the subtitles, with pictures, so if you're feeling lost, you can check that out here.)

select the project file titled 'draft_content', then click generate. you want the file name to be the same as the video name, and again, i'd suggest srt format, because it seems to be more broadly compatible with media players. click 'save to local' and you now have a subtitle file!

translating your subtitles

you probably still need to translate the subtitles. there are plenty of auto-translation options out there. many of them are fee- or subscription-based, or allow a very limited number of characters, or are like 'we provide amazing free translations' and then in the fine print it says that they provide these translations through the magic of uhhhh google translate. so we're just going to skip to google translate, which has the bonus of being widely available and free.

for a shorter video, or one that doesn't have a ton of spoken stuff, you can just copy/paste the contents of the .srt file into the translation software of your choice. the web version of google translate will do 5000 characters in one go, as will systran. that's the most generous allocation that i'm aware of, and will usually get you a couple minutes of video.

the timestamps eat up a ton of characters, though, so for anything longer than a couple minutes, it's easier to upload the whole thing, and google translate is the best for that, because it is, to my knowledge, the only service that allows you to do it. to upload the whole file, you need a .doc or .rtf file.

an .srt file is basically just a text file, so you can just open it in word (or gdocs or whatever), save it as a .doc, and then feed it through google translate. download the output, open it, and save it as an .srt.
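if the upload route doesn't work for you and you're stuck with copy/paste for a longer file, here's a rough python sketch that splits the text of an .srt into pieces that each fit under a character limit, without cutting a caption in half. this is an illustration, not something any of these tools provide: `split_srt` is a made-up name, and the default of 5000 is just the google translate web limit mentioned above.

```python
import re

def split_srt(text: str, limit: int = 5000) -> list[str]:
    """Split .srt text into chunks of at most `limit` characters, breaking only between captions.

    A single caption longer than `limit` is kept whole in its own chunk.
    """
    # captions in an .srt are separated by blank lines
    cues = [c.strip() for c in re.split(r"\n\s*\n", text.replace("\r\n", "\n")) if c.strip()]
    chunks, current = [], ""
    for cue in cues:
        candidate = cue if not current else current + "\n\n" + cue
        if len(candidate) <= limit or not current:
            current = candidate
        else:
            # adding this caption would go over the limit, so start a new chunk
            chunks.append(current)
            current = cue
    if current:
        chunks.append(current)
    return chunks
```

paste each chunk into the translator in order, then paste the translated chunks back together with a blank line between them and save the result as an .srt.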

you're done! you now have your video and a subtitle file in the language of your choice.

time for vibe, the last option in this post.

vibe is a transcription app (not a sex thing, even tho it sounds like one), and it will also auto-translate the transcribed words to english, if you want.

open vibe and select your file, then select the language. if you want it translated to english, hit advanced and toggle 'translate to english'. click translate and wait a while. after a few minutes (or longer, depending on how long the file is), you'll get the text. the save icon is a folder with a down arrow on it, and i understand why people are moving away from tiny floppy disks, but also: i hate it. anyhow, save the output, and now you have your subs file, which you can translate or edit or whatever, as desired.

vibe and capcut sometimes get very different results. vibe seems to be a little bit better at picking up overlapping speech, or speech when there are other noises happening; capcut seems to be better at getting all the words in a sentence. i feel like capcut maybe has a slightly better translation engine, of the two of them, but i usually end up just doing the translation separately. again, it can be worth trying both ways and seeing which gives better results.

special notes about dual/bilingual subs

first: i know that bilingual subs are controversial. if you think they're bad, you don't have to use them! just skip this section.

as with everything else, automatically generating gives mixed results. sometimes the translations are great, and sometimes they're not. i like having dual subs, but for stuff that Matters To Me, for whatever reason, i'll usually generate both just the original and a bilingual version, and then try some other translation methods on the original or parts thereof to see what works best.

not everything displays bilingual subs very well. plex and windows media player both work great, vlc and the default video handler on ubuntu only display whatever the first language is, etc. i'm guessing that if you want dual subbed stuff you already have a system for it.

i'll also point out that if you want dual subs and have gone a route other than capcut, you can create dual subs by pasting the translated version and the untranslated version into a single file. leave the timestamps as they are, delete the line numbers if there are any (sometimes they seem to cause problems when you have dual subs, and i haven't figured out why) and then literally just paste the whole sub file for the first language into a new file. then paste in the whole sub file for the second language. yes, as a single chunk, the whole thing, right under the first language's subs. save the file as [video name].[zh-en].srt (or whatever), and use it like any other sub file.
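if you'd rather not do the delete-the-line-numbers part by hand, here's a minimal python sketch of the same steps. it assumes ordinary .srt files where each caption's number sits on its own line directly above the timestamp line; `strip_cue_numbers` and `make_dual_subs` are made-up names for this example.

```python
import re

# matches an .srt timestamp line such as 00:00:01,000 --> 00:00:02,000
TIMESTAMP = re.compile(r"\d{2}:\d{2}:\d{2}[,.]\d{3}\s*-->\s*\d{2}:\d{2}:\d{2}[,.]\d{3}")

def strip_cue_numbers(srt_text: str) -> str:
    """Drop the number line that sits directly above each timestamp line."""
    lines = srt_text.replace("\r\n", "\n").split("\n")
    kept = []
    for i, line in enumerate(lines):
        following = lines[i + 1] if i + 1 < len(lines) else ""
        if line.strip().isdigit() and TIMESTAMP.match(following.strip()):
            continue  # a caption number, not dialogue
        kept.append(line)
    return "\n".join(kept).strip()

def make_dual_subs(first: str, second: str) -> str:
    """Stack two sub files: all of the first language, then all of the second."""
    return strip_cue_numbers(first) + "\n\n" + strip_cue_numbers(second) + "\n"
```

read both files as utf-8 text, pass their contents to `make_dual_subs`, and write the result out (also as utf-8) under the dual-language file name.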

notes on translation, especially since we're talking about lengthy machine-translations of things.

i default to translation options that allow for translating in large chunks, mostly because i'm lazy. but since an .srt is, again, literally just a text file, they're easy to edit, and if you feel like some of the lines are weird or questionable or whatever, it's easy to change them if you can find a better translation.

so: some fast notes on machine translation options, because i don't know how much time most people spend thinking about this kind of stuff.

one sort of interesting thing to check out is the bing translator. it'll only do 1000 characters at once, but offers the rather interesting option of picking a level of formality. i can't always get it to work, mind, but it's useful especially for times when you're like 'this one line sounds weird'—sometimes the difference between what the translator feels is standard vs formal vs casual english will make a big difference.

very fast illustration of the difference in translations. the random video that i used to make sure i didn't miss any steps explaining things starts with '所以你第二季来'. here's how it got translated:

google: So you come to season 2

google's top alternative: So you come in the second season

bing's standard tone: So here you come for the second season

bing set to casual: So you're coming for the second season, huh?

reverso default guess: So you come in season two

reverso alternate guess: You'll be participating in season two

capcut: So you come in season two

yandex: So you come in the second season

systran: That's why you come in season two

deepl: That's why you're here in season two

vibe: So your second season is here

technically all conveying the same information, but the vibes are very different. sometimes one translator or another will give you a clearly superior translation, so if you feel like the results you're getting are kinda crap, try running a handful of lines through another option and see if it's better.

ok! this was an incredibly long post, and i've almost definitely explained something poorly. again, there are almost certainly better ways to do this, but these ways are free and mostly effective, and they work most of the time, and are better than nothing.

feel free to ask questions and i'll answer as best i can. (the answer to any questions about macs or iphones is 'i'm so sorry, i have no idea tho.' please do not ask those questions.)

#i'm so excited to find out what i totally failed to explain because i'm sure there's something#subtitles#i really do want to reiterate that this is VERY FAR from a perfect system#but it's better than nothing#i assume that we all dream of having at least fluent comprehension of basically every language#but here in the real world...#y'know.#echoes linger


Text

Historical Preoccupations

Hi!

So I decided a few years ago that my history + geography knowledge was dreadful and I wanted to work on it, and I've been doing that slowly. But after getting into the Nine Worlds books by Victoria Goddard, I've been reading a lot about the history of the Pacific Islands, Polynesia, and the Pacific Ocean generally, as well as related topics.

I approach everything I read with a certain amount of caution, as I am not a historian (and don't have all the tools to hand to figure out how reliable my sources are, especially as I am in the UK and most of them are not coming from the actual area in question), but it's been an enjoyable ride so far!

I thought I'd throw together a list of all the things I've been reading / have on my to read shelf with some thoughts on them. I can mostly only tell you about how readable/accessible/interesting the text is, so please don't take this as any comment on accuracy/lack of bias.

I'm making a pinned post for my tumblr that will link to this, and I'm going to try and update it as I read things.

Sea People by Christina Thompson

This was the first one I read, and I really enjoyed it! I think there's a bit of a light touch on the impact of colonialism, but her writing style is very easy to read and I found the way she approached the history very helpful. She does start with European contact, but she goes through each point in history and what they thought the history of Polynesia was and why, with what their biases brought to it. Which was fascinating!

Voyagers by Nicholas Thomas

This was a drier read than the Thompson, but it covered roughly the same historical span and helped add a different angle in a few places. The illustrations/photos were very helpful, too, and it's broken up into small enough sections to keep it moving.

Blue Machine by Helen Czerski

A slight step to the left, topic-wise - this is about how the ocean works, how it affects the world, and how people and animals use it. It opens and closes with the author's time sailing near Hawai'i on an outrigger canoe, and while some of the science went over my head, most of it was really interesting and gave me a much more layered picture of what's going on in all that water.

Pacific by Philip J. Hatfield

This is a beautifully illustrated book that is going through the history of the area in small slices. It's very readable, and has been a good overview that overlapped with some of my previous reading and helped me to solidify some of the knowledge I've been learning in my mind.

A Brief History of the Pacific by Jeremy Black

Disappointing. While the author was much more confident tackling the world wars (upon investigation, military history appears to be his area of academic speciality), I found his attitude towards the earlier histories (particularly the overly defensive and outright weird introduction to the section on Cook) made me rather suspicious of his knowledge on the subject. It's also badly organised and poorly written - like going through a junk drawer of semi-academic writing. Not at all what I'd hoped from the way it was pitched.

Pacific Art in Detail by Jenny Newell

Really enjoyed this one, and I think I would have even without having read it directly after being at the British Museum. Newell writes accessibly and clearly without talking down to the reader, and explores items from Oceania held by the British Museum one at a time, organised by broad themes (Art of Dance and Art of Change were two I found particularly interesting). I was glad to read it before getting to...

Oceania: The Shape of Time by Maia Nuku

The first art book I've ever owned! I'm only at the beginning of this so I'll update this description when I finish. But so far it's written in a somewhat denser and more academic style than Pacific Art in Detail, and is a good way to dig deeper into the subject and look through items held by the Metropolitan Museum of Art, again grouped loosely by theme. And it's a beautifully made book, heavy on the photographs and images.

Upcoming reads:

Sailing Alone by Richard J. King

Another slight step to one side, this collection of stories of solo sails seems fascinating - hopefully it's as interesting as it looks!

Waves Across the South by Sujit Sivasundaram

I'm somewhat intimidated by this one because it's ~500 pages, but hopefully I can tackle it this year, because it does sound really interesting, and like it's going to go into some greater socio-political depth than my previous reads. Fingers crossed!

Under consideration:

I'm not letting myself buy any more until I've caught up, but these are some of the other titles I know about (and am eyeing with varying degrees of interest - I definitely want the Low, though I'm currently having trouble sourcing it).

The Happy Isles of Oceania by Paul Theroux

Hawaiki Rising by Sam Low

Come on Shore and We Will Kill and Eat You All by Christina Thompson

Wayfinding by M R O'Connor

Wayfinding by Michael Bond

I'd love to hear any suggestions of titles on the topic, particularly anything from Polynesian authors!


Text

The Vesuvius Challenge--a $700,000 prize for being able to recover readable text from the 2,000-year-old carbonized scrolls of the Herculaneum Library--has paid off in a huge way, recovering a substantial portion of text from one of the scrolls. I can't think of a more exciting discovery in the field of paleography or ancient literature this century than figuring out how to read these texts. It's also a very cool application of machine learning: using a cracking pattern to identify spots on the 3d reconstruction of the manuscripts that once held ink, training an ML model to scan the surface of the papyrus for ink-vs-no-ink, and using the data points it gathered to assemble a complete picture.

I recommend clicking through and reading about the technology that went into this. It's very interesting, and even though it makes use of some pretty crazy cutting-edge techniques, it seems like it still requires a phenomenal amount of time and effort to make it work.

The next prizes are for scaling up progress, to read multiple entire scrolls--primarily by speeding up the process of mapping the scrolls themselves, which is still a mostly manual process.


Text

Whenever I see danmei discourse reliant on translations, especially where translator interpretations differ, it's so clear that a lot of English-speaking danmei fans truly believe, consciously or unconsciously, that translation is a plug-and-play, one-for-one prospect - that languages are interchangeable and there is an Exact One True Translation that the Perfect Translator will be able to unearth and provide to the masses that ideally encapsulates the original using accurate words.

I suspect this is a venn diagram in the shape of a circle with people who are English monolingual.

I also suspect this is a venn diagram in the shape of a circle with people who think their own interpretation of a text is Truth instead of one possible reading.

Like, even when we read a book in the language it was originally in, there isn't only one true meaning - different people will interpret it differently.

That is magnified when we can only access the text via translation - translation can never be a perfect art because languages aren't one-for-one and English doesn't have words or phrases for every concept in every other language (no two languages do!) and translators have to take what's in the original, interpret it as best they can through their own lens, then convert that into another language while trying to preserve the meaning, the intent, the nuance, the lyricism, and give the secondary language reader something readable, polished, and eloquent.

It cannot be done perfectly.

Having access to multiple translations that differ is a strength, not a weakness - a window into the nuance that is lost in any one translation, a glimpse at how complex and beautiful the original language is.

But there will never be One True Translation, any more than there will ever be One True Interpretation. That's. That's not how books work.

I am begging English-speaking danmei fans to wrap their heads around this, and maybe attempt to study even a little Chinese before opening their mouths about the translation aspect of these books.

If I never see another "I plugged the hanzi of this character's name into a machine translator and they literally mean this hahaha" post again it'll be too soon. If I never see another "this version of the translation is Wrong I will use the version that narrowly supports my personal interpretation" post again it will be too soon. Like. Uggggh.

(This wasn't prompted by anything I saw recently/today it's just a constant low-level annoyance.)

#unforth rambles#and this is why ive been studying chinese for three years#i just want to get to the source and be able to interpret it for myself#but the vast majority of people in english fandom seem to have zero interest in learning chinese#its baffling to me tbh#to see the same people quibbling over translation have zero understanding of how translation works#and zero interest in learning the language so they dont have to rely on translation
